Building Agents with MCP: A short report on going to production

We shipped an agent to handle financial reconciliation and found that piling instructions onto the prompt usually makes things worse (the "Hydra prompt" effect). The fix wasn't better instructions but better data surfaces via MCP.

We work on a finance product. I’m writing this post for my engineering peers, so I won’t drag anyone through a deep dive into the chaotic world of Financial Operations.

Let me just tell you that working on this for a while made me appreciate that supply chains work at all.

One of the ugliest recurring problems is reconciling records when there isn’t a clean join key.

In the real world:

An invoice references a PO that’s missing in the bank transfer.

Names don’t match exactly (“Google” vs “Alphabet”).

Dates drift (invoice date vs payment date vs settlement date).

Amounts drift (fees, FX, partial payments).

Remittance info is missing, truncated, or inconsistent.

Any mix of the above.

A meaningful fraction of cases can’t be auto-reconciled with a deterministic rule like (amount, date, counterparty) and must be investigated using circumstantial evidence.

What ended up working best

We tried a couple of approaches to solve this problem. We tried dumping a couple of smaller Excel files into Gemini to see if that would work (it didn’t). We also tested whether an Assistant could come up with a rule set (it could for the easy cases, but it failed on edge cases and didn’t produce any rules that generalized beyond the dataset it was shown).

What worked really well was an Agent with internal MCP access to the underlying data stored in a relational database.

For anyone not following MCP: it’s an open standard for connecting an AI client to external tools/data sources in a structured way. It’s basically a standard port that lets a model discover and call tools rather than scraping everything through natural language.

Somewhat surprisingly, gpt-5-mini worked as well on this problem as the more powerful models, but that’s not the main thread of this article.

We made the tool surface area tiny on purpose

Our data access MCP server only exposes three capabilities:

List available tables

Describe a table (schema/columns; optionally comments)

Read-only querying (with row limits)

It’s quite a boring design, but by limiting the available paths while still exposing dynamic functionality, we implicitly tell the model how to do the task.
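To make the three capabilities concrete, here is a minimal sketch of what such a surface could look like. It is an illustration under assumptions, not the server described above: it uses Python's built-in sqlite3 instead of a real MCP SDK, and the function names, the `MAX_ROWS` cap, and the `invoices` table are all hypothetical.

```python
import sqlite3

MAX_ROWS = 200  # hypothetical hard cap on rows returned per call


def make_read_only(conn: sqlite3.Connection) -> None:
    """Ask SQLite to reject any statement that would change data."""
    conn.execute("PRAGMA query_only = ON")


def list_tables(conn: sqlite3.Connection) -> list:
    """Capability 1: list available tables and views."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type IN ('table', 'view') AND name NOT LIKE 'sqlite_%' "
        "ORDER BY name"
    )
    return [r[0] for r in rows]


def describe_table(conn: sqlite3.Connection, table: str) -> list:
    """Capability 2: describe one table (column names and declared types)."""
    if table not in list_tables(conn):
        raise ValueError(f"unknown table: {table}")
    cols = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    return [{"name": c[1], "type": c[2]} for c in cols]


def read_query(conn: sqlite3.Connection, sql: str, limit: int = 50) -> list:
    """Capability 3: run a single SELECT and return at most `limit` rows."""
    stripped = sql.strip().rstrip(";")
    if not stripped.lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    limit = max(1, min(limit, MAX_ROWS))
    # Wrapping the query lets us enforce the cap regardless of what was sent.
    cur = conn.execute(f"SELECT * FROM ({stripped}) LIMIT ?", (limit,))
    names = [d[0] for d in cur.description]
    return [dict(zip(names, row)) for row in cur.fetchall()]
```

The prefix check in `read_query` is only a convenience for clearer error messages. The actual guarantee should come from the database side (here `PRAGMA query_only`; in a server database, a read-only role).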

In a finance environment, write tools are a trust-killer anyway: what users say they want (“automate it”) often diverges from what they accept emotionally (“show me, let me approve”).

Also, from a safety perspective: the MCP ecosystem has already had very real issues with misconfigured servers exposed to the internet and prompt injection, so you want to assume every tool needs strict access control, auditing, and least privilege.

The one caveat: semantics matter a lot

This only works if table and column names are semantically meaningful and (ideally) columns and tables have descriptions or comments that match the domain language.

If the schema looks like T_014, col_7, x_value (hello SAP), the agent has to do internal archaeology before it can start on the actual task.

In practice, we found the database surface becomes the context layer. The LLM learns how to think about the task through names and structure. In other words: The schema is the prompt.
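One cheap way to get there without touching the underlying tables is a view layer with domain names plus a description per column. The sketch below assumes SQLite via Python's sqlite3. The view name `bank_transfers`, the column `col_1`, the column meanings, and the description texts are all invented for illustration; only the cryptic names T_014, col_7 and x_value come from the example above.

```python
import sqlite3

# Hypothetical descriptions. In a database with native column comments,
# these would live in the catalog instead of a Python dict.
COLUMN_DESCRIPTIONS = {
    "settlement_date": "Date the bank settled the transfer (ISO 8601).",
    "sender_name": "Counterparty name as printed on the bank statement.",
    "amount_cents": "Amount received, in minor currency units.",
}


def add_semantic_view(conn: sqlite3.Connection) -> None:
    """Expose the cryptic table under names an analyst would use."""
    conn.execute(
        """
        CREATE VIEW bank_transfers AS
        SELECT col_1   AS settlement_date,
               col_7   AS sender_name,
               x_value AS amount_cents
        FROM T_014
        """
    )


def describe_view(conn: sqlite3.Connection, view: str) -> dict:
    """Return {column name: description} for what the agent gets to see."""
    cols = conn.execute(f'PRAGMA table_info("{view}")').fetchall()
    return {c[1]: COLUMN_DESCRIPTIONS.get(c[1], "") for c in cols}
```

The agent is then pointed at `bank_transfers` only, so the names it reads are the ones that carry meaning.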

How the agent actually reconciles

The agent’s behavior looks surprisingly like a decent analyst:

Try perfect matches first: match on exact amount, exact reference / invoice number, and strict date windows.

Then relax constraints: fuzzy name matches, date tolerance (settlement vs invoice vs posting), grouping/splitting (one payment covering multiple invoices). Start pulling data windows instead of single rows.

Accumulate evidence until it can decide: match, partial match, dispute / needs review, everything is messed up.
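Written out as code, the strict-then-relaxed progression might look roughly like the sketch below. This is not what the agent executes (it writes its own queries); it is a hand-written illustration in which the record fields, the date windows, the 0.6 name-similarity threshold, and the use of `difflib` for fuzzy matching are all assumptions.

```python
from dataclasses import dataclass
from datetime import date
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Invoice:
    number: str
    counterparty: str
    amount_cents: int
    invoice_date: date


@dataclass(frozen=True)
class Payment:
    reference: str
    sender: str
    amount_cents: int
    settlement_date: date


def days_between(payment, invoice):
    return (payment.settlement_date - invoice.invoice_date).days


def strict_pass(payment, invoices, max_days=7):
    """Exact amount, invoice number present in the reference, tight dates."""
    return [
        inv for inv in invoices
        if inv.amount_cents == payment.amount_cents
        and inv.number and inv.number in payment.reference
        and 0 <= days_between(payment, inv) <= max_days
    ]


def relaxed_pass(payment, invoices, max_days=45, min_name_score=0.6):
    """Exact amount, fuzzy counterparty name, wider date window."""
    hits = []
    for inv in invoices:
        score = SequenceMatcher(
            None, inv.counterparty.lower(), payment.sender.lower()
        ).ratio()
        if (inv.amount_cents == payment.amount_cents
                and score >= min_name_score
                and 0 <= days_between(payment, inv) <= max_days):
            hits.append(inv)
    return hits


def reconcile(payment, invoices):
    """Return (status, invoice or None, evidence list)."""
    strict = strict_pass(payment, invoices)
    if len(strict) == 1:
        return "match", strict[0], [
            "amount matches exactly", "invoice number found in reference"]
    relaxed = relaxed_pass(payment, invoices)
    if len(relaxed) == 1:
        return "match", relaxed[0], [
            "amount matches exactly", "counterparty name is a close fuzzy match",
            "settlement date within relaxed window"]
    reason = ("no candidate fits" if not relaxed
              else f"{len(relaxed)} candidates fit equally well")
    return "needs_review", None, [reason]
```

Note that a relaxed pass only yields a match when exactly one candidate survives; anything else falls through to review with the reason attached.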

If you want a math analogy: a chunk of reconciliation is basically an assignment problem and, in many-to-one or one-to-many cases, it starts to resemble subset-sum / knapsack (“which set of invoices best explains this payment?”), with soft constraints like names and dates acting as additional signals.

The model is not literally running the Hungarian algorithm, but it does perform a kind of iterative constrained search: strict -> relaxed -> stop when confidence is high enough.
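For intuition, the subset-sum flavor of the many-to-one case can be sketched as a brute-force search. This is an illustration of the analogy only, not something the agent runs; the function name, the invoice numbers, and the `max_group_size` bound are made up, and amounts are integer cents to avoid float comparisons.

```python
from itertools import combinations


def explain_payment(payment_cents, open_invoices, max_group_size=4):
    """Return every group of invoices whose amounts sum exactly to the payment.

    open_invoices maps invoice number -> amount in cents. The search is
    exponential in general, so the group size is capped.
    """
    hits = []
    items = sorted(open_invoices.items())
    for size in range(1, max_group_size + 1):
        for combo in combinations(items, size):
            if sum(amount for _, amount in combo) == payment_cents:
                hits.append([number for number, _ in combo])
    return hits


open_invoices = {"INV-1": 40000, "INV-2": 35000, "INV-3": 25000, "INV-4": 60000}

print(explain_payment(75000, open_invoices))
# [['INV-1', 'INV-2']]
print(explain_payment(100000, open_invoices))
# [['INV-1', 'INV-4'], ['INV-1', 'INV-2', 'INV-3']]
```

The second call is the interesting one: two groupings explain the payment equally well on amount alone, which is exactly where the soft signals (names, dates, references) have to break the tie.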

Results: it handled our recurring hard cases

We tested this against ~20 frequent edge cases on production-level data (things that had historically required humans). The agent was able to recognize and resolve all of them in the way our ops team would expect.

Auditable reasoning trails matter as much as end results in Finance Ops. The agent also outputs explanations for its decisions, such as:

“Amount matches exactly”

“Counterparty name differs but resolves via known alias pattern”

“Payment date aligns with settlement date range”

“Only one candidate fits all constraints; next best candidate breaks constraint Y”

It’s immensely helpful for users to verify results or to understand what has already been tried.

(We keep humans-in-the-loop when it’s ambiguous; we’re not claiming full autonomy in a high-stakes workflow.)

Where it fails

The main failure mode isn’t hallucination. Despite the noise on social media, hallucinations haven’t been an issue for us in tasks that operate on underlying data, be it a database or a code repository.

What does hurt is when a signal the agent needs is missing from the data it can see.

Example: an invoice states amount X. After bank fees, X − 50 arrives, the remittance info is missing, and multiple invoices exist around that value.

If you don’t have a fees table, or a reliable expected fee model, the agent may overfit to circumstantial fields (date/name proximity) and end up with an imperfect match even though no clean knapsack-like solution exists.
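A tiny sketch of why this case is hard, using made-up numbers. It assumes amounts in cents and a hypothetical `max_fee_cents` tolerance standing in for a fee model: as soon as a shortfall is allowed, more than one invoice can explain the same payment, and amount alone cannot pick between them.

```python
def fee_candidates(payment_cents, open_invoices, max_fee_cents):
    """Invoices whose amount exceeds the payment by at most max_fee_cents."""
    return sorted(
        number for number, amount in open_invoices.items()
        if 0 <= amount - payment_cents <= max_fee_cents
    )


def classify(payment_cents, open_invoices, max_fee_cents):
    """Refuse to pick a winner when several invoices explain the shortfall."""
    hits = fee_candidates(payment_cents, open_invoices, max_fee_cents)
    if len(hits) == 1:
        return "match", hits
    if not hits:
        return "needs_review", hits
    return "ambiguous", hits


# Hypothetical data: invoice X = 1000.00, payment arrives as X - 50.00.
open_invoices = {"INV-7": 100000, "INV-8": 100200, "INV-9": 120000}

print(classify(95000, open_invoices, max_fee_cents=0))
# ('needs_review', [])
print(classify(95000, open_invoices, max_fee_cents=6000))
# ('ambiguous', ['INV-7', 'INV-8'])
```

With a real fees table, the expected fee per transfer would narrow the tolerance and often collapse the candidate set to one.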

The obvious fix is to add the missing data (fees, remittance enrichment, counterparty identifiers). Most of the time it doesn’t work like that, simply because getting that data in depends on another person or team.

So, usually we have to admit it’s ambiguous and make it simple for the operator to execute their internal playbook for that case, for example by forwarding the task to another agent that requests info from the customer. But that’s a topic for another post.

Observability: What to log

Understanding how the agent decides is useful.

We collect the user prompt, the sequence of MCP calls (tables described + queries executed), and the intermediate outputs that lead to the final decision.

This is basically a replayable trace of the investigation, which is extremely helpful in debugging and building evals.
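A minimal sketch of what one such trace record could look like, written as one JSON line per investigation. The class names, field names, and the 200-character preview cap are assumptions for illustration, not the schema used in production.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ToolCall:
    tool: str            # e.g. "describe_table" or "read_query"
    arguments: dict      # arguments exactly as the model sent them
    result_preview: str  # truncated output, not the full result set


@dataclass
class InvestigationTrace:
    user_prompt: str
    started_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())
    tool_calls: list = field(default_factory=list)
    decision: Optional[str] = None

    def record(self, tool, arguments, result, preview_chars=200):
        """Append one tool call, keeping only a bounded preview of the result."""
        self.tool_calls.append(
            ToolCall(tool, arguments, str(result)[:preview_chars]))

    def to_json_line(self):
        """Serialize the whole investigation as a single JSON line."""
        return json.dumps(asdict(self), sort_keys=True)


trace = InvestigationTrace("Reconcile the unmatched payment from 2025-03-05")
trace.record("describe_table", {"table": "invoices"}, ["invoice_number", "amount_cents"])
trace.record("read_query", {"sql": "SELECT * FROM invoices", "limit": 50}, "x" * 1000)
trace.decision = "needs_review"
print(trace.to_json_line())
```

Storing previews rather than full result sets keeps the log small and limits how much financial data ends up in it, at the cost of needing database access to replay a query exactly.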

(And for the obvious governance point: log retention + access controls matter a lot here.)

Prompting is a weak lever and “Hydra prompts” are real

This was another surprising lesson.

Keeping system prompts minimal and generic usually helped. When we tightened prompts to fix a failure, we often got a Hydra effect: edge case A improved, edge cases B, C and D got worse.

In practice, LLMs overweight what you tell them right now (prompt salience / instruction dominance). So adding more instructions often causes over-indexing on the new instruction and reduces generality.

With that in mind, the strongest lever we found for improving behavior was raising the semantic quality of the MCP + data layer rather than working on the prompts.

This shifted our work from prompt context engineering to data access context engineering.

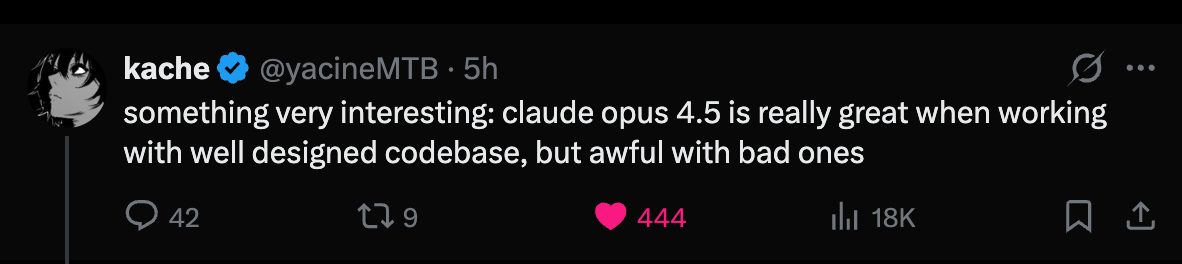

This pattern matches what we see in coding agents

The only agentic use case at scale right now is coding (I’m a skeptic of the actual vs. marketed scale of AI SDRs).

And what made coding agents work wasn’t a perfect prompt. It was the ecosystem of searchable codebases, clear file/module names, fast feedback loops (tests, linters) and tools that give agents discoverability (mostly grep).

That’s the same story here: make the right context and affordances available, and the agent will often figure out the procedure without you teaching it step-by-step.

It also matches broader “AI-in-production” survey data: teams report heavy use of database access and growing use of MCP/tool calling, plus persistent pain around evals and governance (Theory Ventures AI in Practice Survey 2025).

Closing takeaways

Agents can already do surprisingly high-level white-collar investigation work (at least in scoped, tool-grounded settings).

Prompting is useful, but it’s not a reliable primary control knob; it’s easy to overfit and regress.

The biggest leverage is building a high-quality semantic data surface (schema, views, descriptions, access patterns) and restricting tools to what you can govern.

If you’ve shipped something similar (reconciliation, audit, fraud ops, compliance investigations, etc.), I’d love to compare notes, especially around eval design and how you handle true ambiguity.